Robust Pupil Detection and Gaze Estimation

The reliable estimation of the pupil position in eye images is perhaps the most important prerequisite in gaze-based HMI applications.

While there are many approaches that enable accurate pupil tracking under laboratory conditions, tracking the pupil in real-world images is highly challenging due to changes in illumination, reflections on glasses or on the eyeball, off-axis camera positions, contact lenses, and many other factors.

Description 500,000 segmented images

Human gaze behavior is not the only important aspect of eye tracking. The eyelids reveal additional important information, such as fatigue, and the pupil size provides indications of workload. The current state-of-the-art datasets focus on challenges in pupil center detection, whereas other aspects, such as lid closure and pupil size, are neglected. Therefore, we propose a fully convolutional neural network for pupil and eyelid segmentation as well as eyelid landmark and pupil ellipse regression. The network is jointly trained using the log loss for segmentation and the L1 loss for landmark and ellipse regression. The proposed network is intended for offline processing and the creation of datasets, which can then be used to train resource-saving, real-time machine learning algorithms such as random forests. In addition, we will provide the world's largest eye image dataset, with more than 500,000 images.
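The joint training objective described above can be illustrated with a minimal NumPy sketch. It only shows how a log loss on the segmentation output and an L1 loss on the regression output might be combined; the function names and the `reg_weight` balancing factor are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def log_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and a 0/1 mask."""
    p = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-(target * np.log(p) + (1.0 - target) * np.log(1.0 - p))))

def l1_loss(pred, target):
    """Mean absolute error, e.g. for landmark or ellipse parameters."""
    return float(np.mean(np.abs(pred - target)))

def joint_loss(seg_pred, seg_target, reg_pred, reg_target, reg_weight=1.0):
    """Sum of both terms; reg_weight is a hypothetical balancing factor."""
    return log_loss(seg_pred, seg_target) + reg_weight * l1_loss(reg_pred, reg_target)
```

With a perfect segmentation, the joint loss reduces to the weighted L1 term, so the regression error alone drives the gradient in that case.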

Description Cycle GAN

Eye tracking is increasingly influencing scientific areas such as psychology, cognitive science, and human-computer interaction. Many eye trackers output the gaze location and the pupil center. However, other valuable information, such as the fatigue of a person, can also be extracted from the eyelids. We evaluated Generative Adversarial Networks (GANs) for eyelid and pupil area segmentation, data generation, and image refinement. While the segmentation GAN performs the desired task, the others serve as supporting networks. The trained data generation GAN does not require simulated data to increase the dataset; it simply uses existing data and creates subsets. The purpose of the refinement GAN, in contrast, is to simplify manual annotation by removing noise and occlusion in an image without changing the eye structure and pupil position. In addition, 100,000 pupil and eyelid segmentations are made publicly available for images from the Labeled Pupils in the Wild data set. These will support further research in this area.

Description TINY CNN

In this work, we compare the use of convolution, binary, and decision tree layers in neural networks for the estimation of pupil landmarks. These landmarks are used for the computation of the pupil ellipse and have proven to be effective in previous research. The evaluated network structure is the same for all layer types and as small as possible to allow real-time application. The evaluations include the accuracy of the ellipse determination based on the Jaccard index and the accuracy of the pupil center. Furthermore, the CPU runtime is considered to make statements about real-time usability. The trained models are also optimized using pruning to improve the runtime. These optimized nets are also evaluated with respect to the Jaccard index and the accuracy of the pupil center estimation.
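As an illustration of the ellipse accuracy measure, the following sketch rasterizes two ellipses and computes their Jaccard index (intersection over union). The pixel-mask approach and the helper names are assumptions for illustration; the evaluation in the paper may compute the overlap differently.

```python
import numpy as np

def ellipse_mask(shape, cx, cy, a, b, angle_rad=0.0):
    """Boolean mask of pixels inside an ellipse with center (cx, cy) and semi-axes a, b."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    dx, dy = xs - cx, ys - cy
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    u = dx * c + dy * s    # coordinate along the first semi-axis
    v = -dx * s + dy * c   # coordinate along the second semi-axis
    return (u / a) ** 2 + (v / b) ** 2 <= 1.0

def jaccard_index(mask_a, mask_b):
    """Intersection over union of two boolean masks (1.0 if both are empty)."""
    inter = np.logical_and(mask_a, mask_b).sum()
    union = np.logical_or(mask_a, mask_b).sum()
    return float(inter) / float(union) if union > 0 else 1.0
```

A predicted ellipse that matches the annotation exactly yields 1.0, while two ellipses without any overlap yield 0.0.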

Description MAM

Accurate point detection on image data is an important task for many applications, such as robot perception, scene understanding, gaze point regression in eye tracking, head pose estimation, or object outline estimation. In addition, it can be beneficial for various object detection tasks in which minimal bounding boxes are sought, since the method can be applied to each corner. We propose a novel self-training method, Multiple Annotation Maturation (MAM), that enables fully automatic labeling of large amounts of image data. Moreover, MAM produces detectors that can be used online afterward. We evaluated our algorithm on data from different detection tasks for eye, pupil center (head-mounted and remote), and eyelid outline points and compared the performance to the state of the art. The evaluation was done on over 300,000 images, and our method shows outstanding adaptability and robustness. In addition, we contribute a new dataset with more than 16,200 accurate, manually labeled images for remote eyelid, pupil center, and pupil outline detection. This dataset was recorded in a prototype car interior equipped with all standard tools, posing various challenges to object detection such as reflections, occlusion from steering wheel movement, or large head movements.

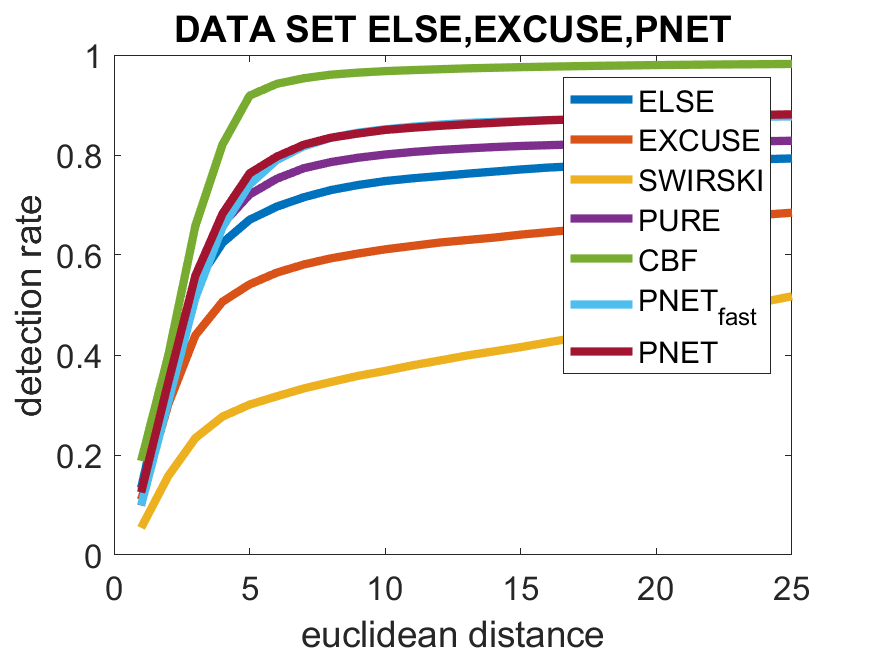

Description CBF

Modern eye tracking systems rely on fast and robust pupil detection, and several algorithms have been proposed for eye tracking under real-world conditions. In this work, we propose a novel binary feature selection approach that is trained by computing conditional distributions. These features are scalable and rotatable, allowing for distinct image resolutions, and consist of simple intensity comparisons, making the approach robust to different illumination conditions as well as rapid illumination changes. The proposed method was evaluated on multiple publicly available data sets, considerably outperforming state-of-the-art methods, and being real-time capable for very high frame rates. Moreover, our method is designed to be able to sustain pupil center estimation even when typical edge-detection-based approaches fail – e.g., when the pupil outline is not visible due to occlusions from reflections or eyelids/lashes. As a consequence, it does not attempt to provide an estimate for the pupil outline. Nevertheless, the pupil center suffices for gaze estimation – e.g., by regressing the relationship between pupil center and gaze point during calibration.
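The calibration regression mentioned at the end of the abstract can be sketched as follows. This is a generic second-order polynomial mapping fitted by least squares, a common choice in gaze estimation; it is an assumption for illustration and not necessarily the mapping used with CBF. All function names are hypothetical.

```python
import numpy as np

def poly_features(pupil_xy):
    """Second-order polynomial terms [1, x, y, xy, x^2, y^2] for each pupil center."""
    x, y = pupil_xy[:, 0], pupil_xy[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y, x ** 2, y ** 2])

def fit_gaze_mapping(pupil_xy, gaze_xy):
    """Least-squares fit from pupil centers to gaze points collected during calibration."""
    coeffs, *_ = np.linalg.lstsq(poly_features(pupil_xy), gaze_xy, rcond=None)
    return coeffs  # shape (6, 2): one column per gaze coordinate

def predict_gaze(coeffs, pupil_xy):
    """Map new pupil centers to gaze points using the fitted coefficients."""
    return poly_features(pupil_xy) @ coeffs
```

A typical calibration would show a grid of targets (for example nine points), record one pupil center per target, call `fit_gaze_mapping` once, and then call `predict_gaze` for every subsequent frame.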

Description BORE

Undoubtedly, eye movements contain an immense amount of information, especially when looking at fast eye movements, namely the timing of fixation, saccade, and microsaccade events. While modern cameras support recording at a few thousand frames per second, to date the majority of studies use eye trackers with frame rates of about 120 Hz for head-mounted and 250 Hz for remote trackers. In this study, we aim to overcome the challenge of running pupil tracking algorithms in real time with high-speed cameras for remote eye tracking applications. We propose an iterative pupil center detection algorithm formulated as an optimization problem. We evaluated our algorithm on more than 13,000 eye images, on which it outperforms earlier solutions with regard to both runtime and detection accuracy. Moreover, our system is capable of boosting its runtime in an unsupervised manner, which removes the need for manual annotation of pupil images.

Description PUREST

Pervasive eye-tracking applications such as gaze-based human-computer interaction and advanced driver assistance require real-time, accurate, and robust pupil detection. However, automated pupil detection has proved to be an intricate task in real-world scenarios due to a large mixture of challenges – for instance, quickly changing illumination and occlusions. In this work, we introduce the Pupil Reconstructor with Subsequent Tracking (PuReST), a novel method for fast and robust pupil tracking. The proposed method was evaluated on over 266,000 realistic and challenging images acquired with three distinct head-mounted eye tracking devices, increasing pupil detection rate by 5.44 and 29.92 percentage points while reducing average run time by a factor of 2.74 and 1.1 w.r.t. state-of-the-art 1) pupil detectors and 2) vendor-provided pupil trackers, respectively. Overall, PuReST outperformed other methods in 81.82% of use cases.

Description PURE

Real-time, accurate, and robust pupil detection is an essential prerequisite to enable pervasive eye-tracking and its applications – e.g., gaze-based human-computer interaction, health monitoring, foveated rendering, and advanced driver assistance. However, automated pupil detection has proved to be an intricate task in real-world scenarios due to a large mixture of challenges such as quickly changing illumination and occlusions. In this paper, we introduce the Pupil Reconstructor (PuRe), a method for pupil detection in pervasive scenarios based on novel edge segment selection and conditional segment combination schemes; the method also includes a confidence measure for the detected pupil. The proposed method was evaluated on over 316,000 images acquired with four distinct head-mounted eye tracking devices. Results show a pupil detection rate improvement of over 10 percentage points w.r.t. state-of-the-art algorithms in the two most challenging data sets (6.46 for all data sets), further pushing the envelope for pupil detection. Moreover, we advance the evaluation protocol of pupil detection algorithms by also considering eye images in which pupils are not present and contributing a new data set of mostly closed-eye images. In this aspect, PuRe improved precision and specificity w.r.t. state-of-the-art algorithms by 25.05 and 10.94 percentage points, respectively, demonstrating the meaningfulness of PuRe’s confidence measure. PuRe operates in real-time for modern eye trackers (at 120 fps) and is fully integrated into EyeRecToo – an open-source state-of-the-art software for pervasive head-mounted eye tracking.
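To make the two metrics concrete, the sketch below computes precision and specificity from per-image flags. It assumes a simplified protocol in which each image is only labeled as "pupil present" or not and each detector output as "pupil reported" or not, ignoring localization error; the paper's exact protocol may differ, and the function names are hypothetical.

```python
def confusion_counts(present, reported):
    """Count outcomes from per-image flags: pupil present (truth) vs. pupil reported (detector)."""
    tp = sum(1 for p, r in zip(present, reported) if p and r)
    fp = sum(1 for p, r in zip(present, reported) if not p and r)
    tn = sum(1 for p, r in zip(present, reported) if not p and not r)
    fn = sum(1 for p, r in zip(present, reported) if p and not r)
    return tp, fp, tn, fn

def precision(tp, fp):
    """Share of reported pupils that belong to images actually containing a pupil."""
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0

def specificity(tn, fp):
    """Share of pupil-free images for which the detector correctly reported nothing."""
    return tn / (tn + fp) if (tn + fp) > 0 else 0.0
```

A detector without a confidence measure reports a pupil for every image, which drives specificity on closed-eye images to zero; rejecting low-confidence detections is what allows both values to rise.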

Description ElSe

The input to the algorithm is a grayscale image. After normalization, a Canny edge filter is applied to the image. In the next algorithmic step, edge connections that could impair the surrounding edge of the pupil are removed. Afterwards, connected edges are collected and evaluated based on straightness, intensity value, elliptic properties, the possibility of fitting an ellipse to them, and a pupil plausibility check. If a valid ellipse describing the pupil is found, it is returned as the result. If no ellipse is found, a second analysis is conducted. To speed up the convolution with the surface difference and mean filters, the image is downscaled. This operation is performed by calculating a histogram for all pixels of the large image that influence a given pixel in the downscaled image. In each histogram, the mean of all intensity values up to the mean of the histogram is calculated and used as the value for the pixel in the downscaled image. After applying the surface difference and mean filters to the downscaled image, the best position is selected by multiplying the results of both filters and taking the position of the maximum. Choosing a pixel position in the downscaled image leads to a distance error of the pupil center in the full-scale image. Therefore, the position has to be optimized on the full-scale image based on an analysis of the pixels surrounding the chosen position.
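The downscaling step can be sketched in NumPy as follows. The sketch assumes that each output pixel is influenced by one non-overlapping square block of the input and computes the statistic directly instead of building an explicit histogram; the window layout in the published ElSe code may differ, and the function name is hypothetical.

```python
import numpy as np

def downscale_dark_mean(image, factor):
    """Downscale a grayscale image by `factor`, keeping the mean of the darker pixels per block.

    For every factor x factor block, the block mean is computed first; the
    output pixel is the mean of all block values that do not exceed it.
    """
    h_out, w_out = image.shape[0] // factor, image.shape[1] // factor
    # Crop to a multiple of factor and regroup into (h_out, w_out, factor * factor).
    cropped = image[:h_out * factor, :w_out * factor].astype(np.float64)
    blocks = cropped.reshape(h_out, factor, w_out, factor).transpose(0, 2, 1, 3)
    blocks = blocks.reshape(h_out, w_out, factor * factor)
    block_mean = blocks.mean(axis=2, keepdims=True)
    dark = blocks <= block_mean  # never empty: the block minimum is always <= the mean
    return (blocks * dark).sum(axis=2) / dark.sum(axis=2)
```

Averaging only the darker values biases the downscaled image toward dark structures such as the pupil, so small dark regions are less likely to be washed out than with plain block averaging.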

Description ExCuSe

The input to the algorithm is a grayscale image. In the first step, the intensity histogram is analyzed and the algorithm decides whether it expects the pupil to be bright or dark. For bright pupils, the algorithm selects the curved edge with the darkest enclosed intensity value. This is done by refining the result of the Canny edge-filtered image with morphological operations. The remaining edges are classified as curved or straight. For the curved edges, the enclosed intensity value is calculated, and the best edge is selected. If the algorithm expects the pupil to be dark, a threshold based on the standard deviation is calculated. The image is thresholded, and a coarse positioning is performed in a manner similar to the Radon transform. This coarse position is optimized by moving to nearby intensity values that are darker than or equally dark as their neighborhood. After the optimization, the image is thresholded again with an increased threshold. All edges that are too far from pixels under the threshold are removed. The remaining edges are filtered as described above for the bright pupil. Starting from the optimized position, the surrounding edges are used to fit an ellipse.

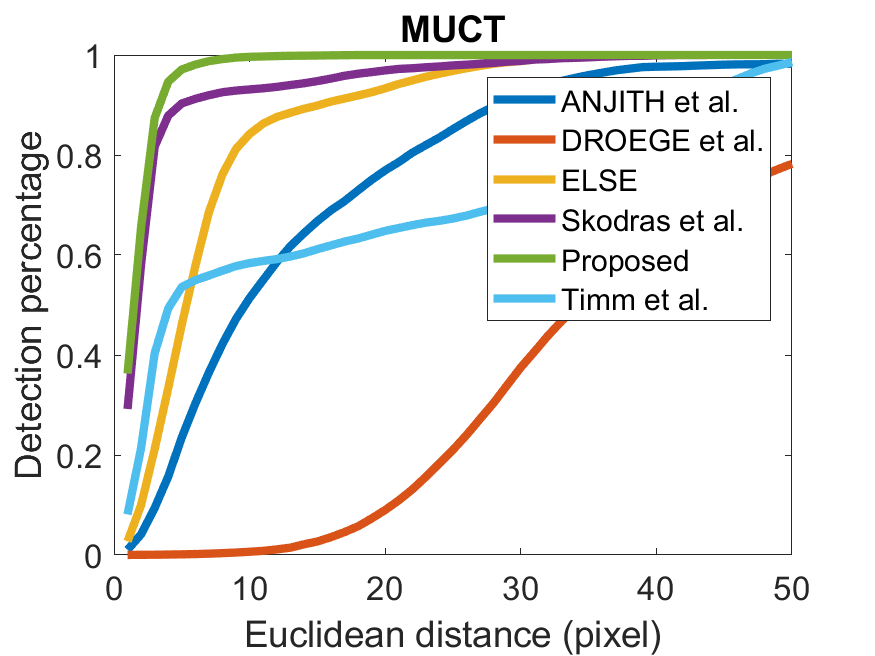

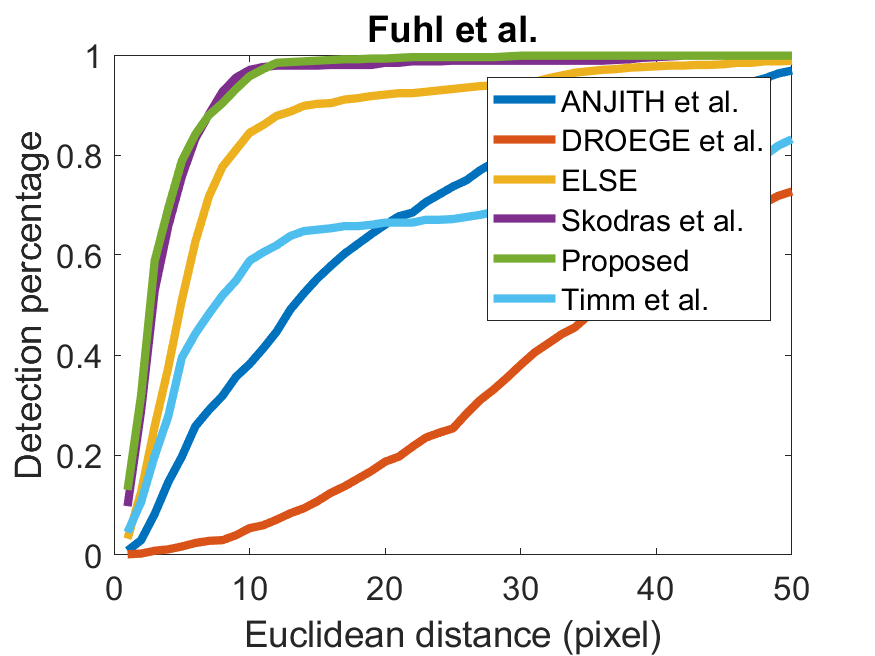

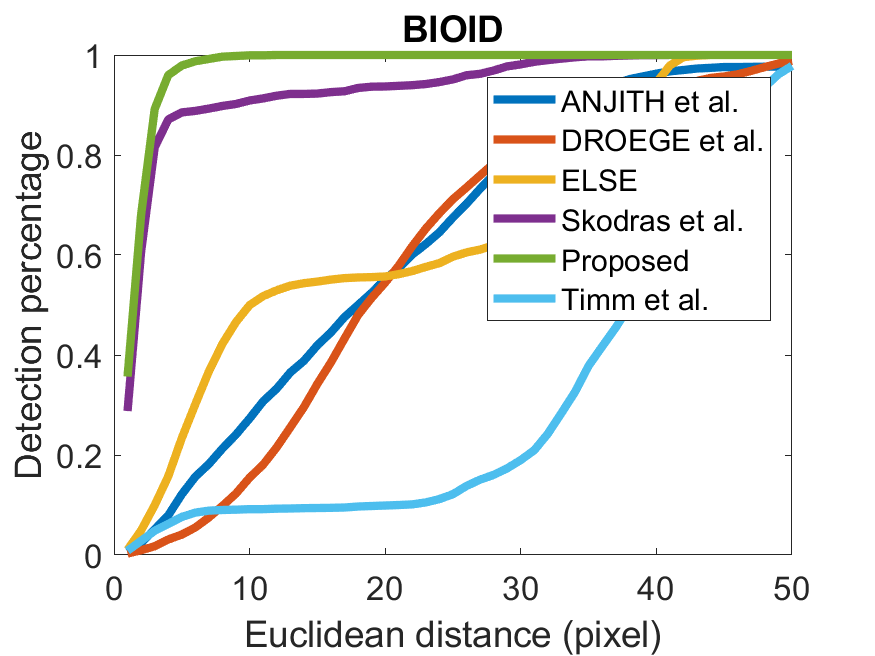

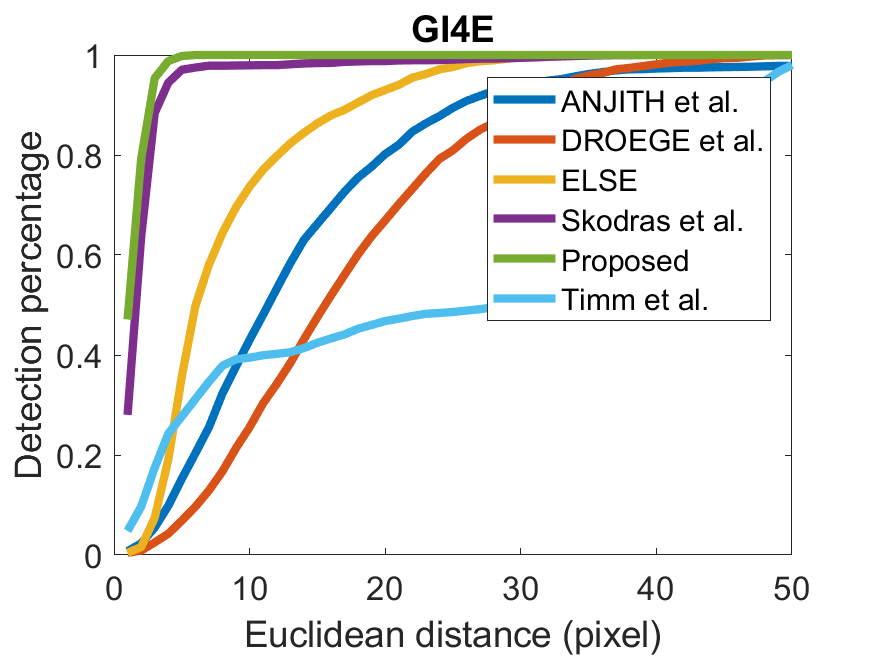

Results

Results remote (Proposed = BORE)

Results microscope

Sourcecode

- ExCuSe C++ code

- ExCuSe Matlab code

- ElSe C++ code (morphologic split)

- ElSe C++ code (algorithmic split)

- ElSe C++ code (morphologic split adjustable validity threshold for blink rejection)

- ElSe C++ code (algorithmic split adjustable validity threshold for blink rejection)

- ElSe C++ code (remote configuration)

- Reimplementation George [Link]

- Reimplementation Timm [Link]

- Reimplementation Droege [Link]

- Pupil detection through an ocular of a microscope

- CBF C++ lib

- BORE C++ lib

- MAM C++ lib

Example results