Eye Movements Identification

Approaches for the segmentation and synthesis of eye-tracking data using neural networks and other machine learning methods.

We also use these networks for reconstruction and, in conjunction with a variational autoencoder, to generate eye movement data. The first improvement of our approach is that no input window is necessary: because the networks are fully convolutional, input of any size can be processed directly. The second improvement is that both the input and the generated data are raw eye-tracking data (position X, Y, and time) without preprocessing. This is achieved by pre-initializing the filters in the first layer and by building the input tensor along the z axis. We evaluated our approach on three publicly available datasets and compared the results to the state of the art.
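To illustrate why a convolutional layer needs no fixed input window, here is a minimal sketch in plain Python. It is not the network described above: the function `conv1d_same` and the central-difference filter `DIFF_KERNEL` are hypothetical, chosen only to show a hand-initialized filter being applied to raw position sequences of different lengths.

```python
def conv1d_same(signal, kernel):
    """Zero-padded 1D cross-correlation; the output has the same length as the input."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(signal):  # samples outside the signal count as zero
                acc += weight * signal[j]
        out.append(acc)
    return out


# Assumption: a central-difference filter as an example of a pre-initialized
# first-layer filter. It turns raw positions into an approximate velocity.
DIFF_KERNEL = [-0.5, 0.0, 0.5]

if __name__ == "__main__":
    # The same filter is applied to sequences of different lengths.
    for n in (5, 12, 100):
        positions = [0.1 * t for t in range(n)]
        print(n, len(conv1d_same(positions, DIFF_KERNEL)))
```

Because the filter slides over the sequence, nothing in the computation depends on the sequence length, which is the property the fully convolutional design relies on.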

Eye movement detection and simulation

Eye movements hold information about human perception, intention, and cognitive state. Various algorithms have been proposed to identify and distinguish eye movements, particularly fixations, saccades, and smooth pursuits. A major drawback of existing algorithms is that they rely on accurate and constant sampling rates, impeding straightforward adaptation to new movements such as microsaccades. We propose a novel eye movement simulator that i) probabilistically simulates saccade movements as gamma distributions considering different peak velocities and ii) models smooth pursuit onsets with the sigmoid function. Additionally, it is capable of producing velocity and two-dimensional gaze sequences for static and dynamic scenes using saliency maps or real fixation targets. Our approach is also capable of simulating any sampling rate, even with fluctuations. The simulation is evaluated against publicly available real data using a squared error.
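The two modeling ideas above can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulator itself: the function names, the gamma shape and scale values, the sigmoid midpoint and steepness, and the jitter model for the sampling rate are all hypothetical.

```python
import math
import random


def gamma_pdf(t, shape, scale):
    """Probability density of the gamma distribution at t."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def saccade_velocity_profile(timestamps, peak_velocity, shape=4.0, scale=0.005):
    """Gamma-shaped velocity profile, rescaled so its maximum equals peak_velocity.

    timestamps are seconds since saccade onset; shape must be greater than 1.
    """
    mode = (shape - 1) * scale  # location of the gamma density maximum
    peak = gamma_pdf(mode, shape, scale)
    return [peak_velocity * gamma_pdf(t, shape, scale) / peak for t in timestamps]


def pursuit_onset_velocity(timestamps, target_velocity, midpoint=0.1, steepness=50.0):
    """Sigmoid ramp from rest towards the target velocity."""
    return [target_velocity / (1.0 + math.exp(-steepness * (t - midpoint)))
            for t in timestamps]


def jittered_timestamps(duration, rate_hz, jitter=0.2, seed=0):
    """Timestamps at a nominal rate whose sampling interval fluctuates by +/- jitter."""
    rng = random.Random(seed)
    step = 1.0 / rate_hz
    t, out = 0.0, []
    while t < duration:
        out.append(t)
        t += step * (1.0 + rng.uniform(-jitter, jitter))
    return out


if __name__ == "__main__":
    ts = jittered_timestamps(duration=0.05, rate_hz=1000.0)
    velocities = saccade_velocity_profile(ts, peak_velocity=400.0)
    print(len(ts), max(velocities))
```

Evaluating the profiles at arbitrary timestamps, rather than on a fixed grid, is what allows any sampling rate, including a fluctuating one, to be simulated.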

Description Histogram of oriented velocities

Research in various fields, including psychology, cognition, and medical science, deals with eye-tracking data to extract information about the intention and cognitive state of a subject. For the extraction of this information, the detection of eye movement types is an important task. Modern eye-tracking data is noisy, and most state-of-the-art algorithms are not developed for all types of eye movements, since these are still under research. We propose a novel feature for eye movement detection, called the histogram of oriented velocities. The construction of the feature is similar to the well-known histogram of oriented gradients from computer vision. Since the detector is trained using machine learning, it can always be extended to new eye movement types. We evaluate our feature against the state of the art on publicly available data. The evaluation includes different machine learning approaches such as support vector machines, regression trees, and k-nearest neighbors. We evaluate our feature together with the machine learning approaches for different parameter sets.
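One way to read the analogy with the histogram of oriented gradients is sketched below. The details are assumptions for illustration: the function name, the number of bins, and the speed-weighted voting (borrowed from the magnitude weighting in histograms of oriented gradients) are not taken from the description above.

```python
import math


def histogram_of_oriented_velocities(samples, n_bins=8):
    """Orientation histogram of the velocity vectors between consecutive gaze samples.

    samples is a list of (x, y, t) tuples. Each velocity vector votes for the bin
    of its direction, weighted by its speed. The histogram is normalized to sum to 1.
    """
    hist = [0.0] * n_bins
    for (x0, y0, t0), (x1, y1, t1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicated or out-of-order timestamps
        vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
        speed = math.hypot(vx, vy)
        if speed == 0.0:
            continue  # no movement, no direction to vote for
        angle = math.atan2(vy, vx) % (2 * math.pi)
        bin_index = int(angle / (2 * math.pi) * n_bins) % n_bins
        hist[bin_index] += speed
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


if __name__ == "__main__":
    window = [(0.0, 0.0, 0.00), (1.0, 0.2, 0.01), (2.1, 0.3, 0.02), (2.0, 1.5, 0.03)]
    print(histogram_of_oriented_velocities(window))
```

The resulting vector could then be passed to any of the classifiers mentioned above, such as a support vector machine or a k-nearest neighbors model.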

Description Rule Learner

Eye movements hold information about human perception, intention, and cognitive state. Various algorithms have been proposed to identify and distinguish eye movements, particularly fixations, saccades, and smooth pursuits. A major drawback of existing algorithms is that they rely on accurate and constant sampling rates and on error-free recordings, and that they impede straightforward adaptation to new movements, such as microsaccades, since they are designed to detect specific eye movements. We propose a novel rule-based machine learning approach to create detectors on annotated or simulated data. It is capable of learning diverse types of eye movements as well as automatically detecting pupil detection errors in the raw gaze data. Additionally, our approach is capable of using any sampling rate, even with fluctuations. Our approach learns several interdependent thresholds and previous type classifications and combines them into sets of detectors automatically. We evaluated our approach against state-of-the-art algorithms on publicly available datasets.
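As a heavily simplified illustration of learning a threshold from annotated data, the sketch below fits a single speed threshold that separates two classes. It is not the approach described above, which learns several interdependent thresholds and uses previous classifications. The function names and the error-count criterion are assumptions.

```python
import math


def sample_speeds(samples):
    """Speed between consecutive (x, y, t) samples, using the actual time
    differences so that fluctuating sampling rates are handled."""
    speeds = []
    for (x0, y0, t0), (x1, y1, t1) in zip(samples, samples[1:]):
        dt = t1 - t0
        speeds.append(math.hypot(x1 - x0, y1 - y0) / dt if dt > 0 else 0.0)
    return speeds


def learn_threshold(values, labels):
    """Find the threshold with the fewest errors for the rule 'value > threshold'.

    labels are booleans (True for the positive class, e.g. saccade).
    Returns (threshold, error_count), or (None, None) if there are fewer than
    two distinct values to place a threshold between.
    """
    candidates = sorted(set(values))
    best_threshold, best_errors = None, None
    for low, high in zip(candidates, candidates[1:]):
        threshold = (low + high) / 2
        errors = sum((v > threshold) != label for v, label in zip(values, labels))
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold, best_errors


if __name__ == "__main__":
    speeds = [1.0, 2.0, 3.0, 50.0, 60.0, 70.0]
    is_saccade = [False, False, False, True, True, True]
    print(learn_threshold(speeds, is_saccade))
```

A full rule learner would repeat this kind of search over several features and combine the resulting rules. The single-threshold case only shows the basic idea of deriving a detector from labeled samples rather than setting it by hand.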

Example

Automatic Identification of Eye Movements

I-BDT

The Bayesian Decision Theory Identification (I-BDT) algorithm was designed to identify fixations, saccades, and smooth pursuits in real-time for low-resolution eye trackers. Additionally, the algorithm operates directly on the eye-position signal and, thus, requires no calibration.

Downloads

- Matlab Implementation

- C++ Reimplementation

- Datasets containing fixations, saccades, as well as straight and circular smooth pursuits

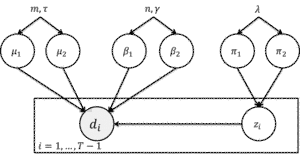

Bayesian Online Clustering of Eye Movements

The task of automatically tracking visual attention in dynamic visual scenes is highly challenging. To approach it, we propose a Bayesian online learning algorithm. Based on a mixture model, the algorithm can identify visual fixations and tell them apart from saccades, even as the visual scene changes and new objects appear.

The source code for use with Visual Studio is included in the ScanpathViewer Software. Scanpath Viewer is a visualization tool for eye-tracking recordings. It can produce customizable, animated heatmaps and scanpath graphs.

[Download]